Authors: Richard Osuala, Daniel M. Lang, Anneliese Riess, Georgios Kaissis, Zuzanna Szafranowska, Grzegorz Skorupko, Oliver Diaz, Julia A. Schnabel, Karim Lekadir

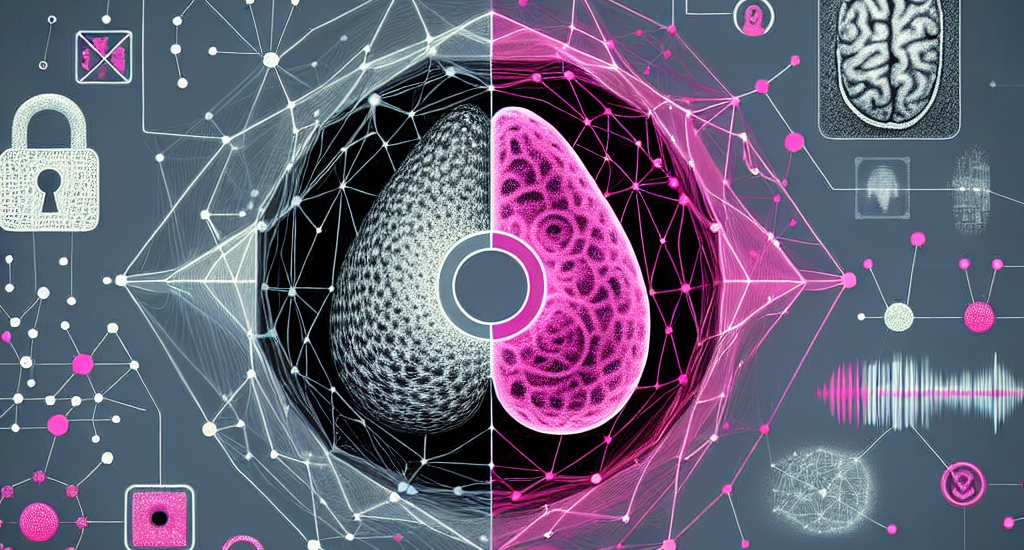

Abstract: Deep learning holds immense promise for aiding radiologists in breast cancer detection. However, achieving optimal model performance is hampered by limitations in the availability and sharing of data, commonly associated with patient privacy concerns. Such concerns are further exacerbated because traditional deep learning models can inadvertently leak sensitive training information. This work addresses these challenges by exploring and quantifying the utility of privacy-preserving deep learning techniques, concretely, (i) differentially private stochastic gradient descent (DP-SGD) and (ii) fully synthetic training data generated by our proposed malignancy-conditioned generative adversarial network. We assess these methods via downstream malignancy classification of mammography masses using a transformer model. Our experimental results show that synthetic data augmentation can improve privacy-utility tradeoffs in differentially private model training. Further, model pretraining on synthetic data achieves remarkable performance, which can be further increased with DP-SGD fine-tuning across all privacy guarantees. With this first in-depth exploration of privacy-preserving deep learning in breast imaging, we address current and emerging clinical privacy requirements and pave the way towards the adoption of private high-utility deep diagnostic models. Our reproducible codebase is publicly available at https://github.com/RichardObi/mammo_dp.

Source: http://arxiv.org/abs/2407.12669v1