Authors: Yifan Gong, Zheng Zhan, Yanyu Li, Yerlan Idelbayev, Andrey Zharkov, Kfir Aberman, Sergey Tulyakov, Yanzhi Wang, Jian Ren

Abstract: Good weight initialization serves as an effective measure to reduce the

training cost of a deep neural network (DNN) model. The choice of how to

initialize parameters is challenging and may require manual tuning, which can

be time-consuming and prone to human error. To overcome such limitations, this

work takes a novel step towards building a weight generator to synthesize the

neural weights for initialization. We use the image-to-image translation task

with generative adversarial networks (GANs) as an example due to the ease of

collecting model weights spanning a wide range. Specifically, we first collect

a dataset with various image editing concepts and their corresponding trained

weights, which are later used for the training of the weight generator. To

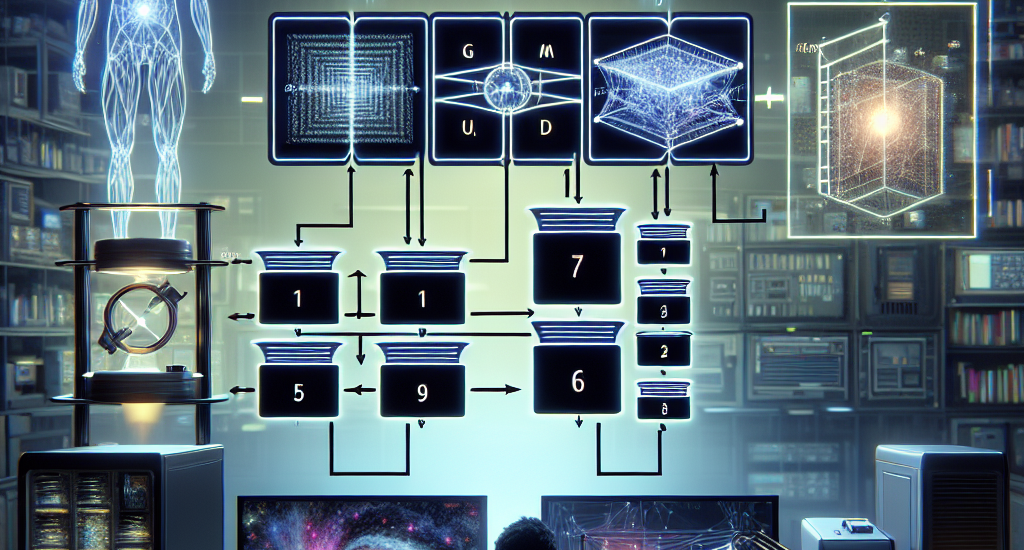

address the different characteristics among layers and the substantial number

of weights to be predicted, we divide the weights into equal-sized blocks and

assign each block an index. Subsequently, a diffusion model is trained with

such a dataset using both text conditions of the concept and the block indexes.

By initializing the image translation model with the denoised weights predicted

by our diffusion model, the training requires only 43.3 seconds. Compared to

training from scratch (i.e., Pix2pix), we achieve a 15x training time

acceleration for a new concept while obtaining even better image generation

quality.
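The block-partitioning step described in the abstract (dividing the weights into equal-sized blocks and assigning each block an index) can be illustrated with a minimal sketch. The function names `split_into_blocks` and `merge_blocks`, the zero-padding of the final block, and the toy block size are assumptions made for illustration; the abstract does not specify how the authors handle a weight count that is not a multiple of the block size.

```python
from typing import List, Sequence, Tuple


def split_into_blocks(
    weights: Sequence[float], block_size: int, pad_value: float = 0.0
) -> List[Tuple[int, List[float]]]:
    """Split a flat weight vector into equal-sized, indexed blocks.

    Padding the last block with `pad_value` is an assumption of this
    sketch, not a detail taken from the paper.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    flat = list(weights)
    remainder = len(flat) % block_size
    if remainder:
        # Pad so that every block has exactly block_size entries.
        flat.extend([pad_value] * (block_size - remainder))
    return [
        (index, flat[start:start + block_size])
        for index, start in enumerate(range(0, len(flat), block_size))
    ]


def merge_blocks(
    blocks: Sequence[Tuple[int, List[float]]], original_length: int
) -> List[float]:
    """Reassemble indexed blocks into a flat vector and drop the padding."""
    ordered = sorted(blocks, key=lambda item: item[0])
    flat = [value for _, block in ordered for value in block]
    return flat[:original_length]


# Toy example: 7 weights, block size 3 -> 3 blocks, the last one padded.
weights = [0.1, -0.2, 0.3, 0.4, -0.5, 0.6, 0.7]
blocks = split_into_blocks(weights, block_size=3)
restored = merge_blocks(blocks, original_length=len(weights))
```

In a setup like the one the abstract describes, each block index would be passed to the generator as a condition alongside the text prompt, so that blocks can be predicted separately and then merged back into the full weight vector in index order.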

Source: http://arxiv.org/abs/2407.11966v1